Laura Kuenssberg, Presenter, Sunday with Laura Kuenssberg

BBC

Warning – This story contains disturbing content and discussion of suicide

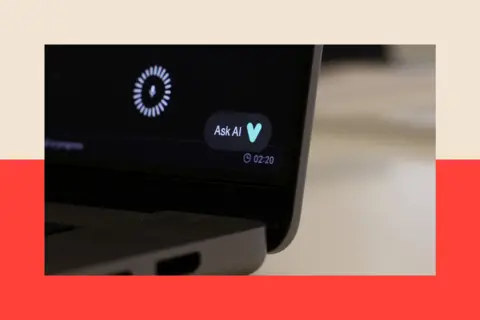

Megan Garcia had no idea that her teenage son Sewell, a “bright and beautiful boy”, had begun dating an online character at the end of spring 2023.

“It’s like a predator or a stranger in your house,” Ms Garcia told me in her first UK interview. “And it’s even more dangerous because a lot of times kids hide it – so parents don’t know.”

Within ten months, Sewell, 14, was dead. He took his own life.

Only afterwards did Ms Garcia and her family discover a large cache of messages between Sewell and a chatbot based on the Game of Thrones character Daenerys Targaryen.

She says the messages were romantic and explicit and, in her view, caused Sewell’s death by encouraging suicidal thoughts and asking him to “come home to me”.

Ms Garcia, who lives in the United States, is the first parent to sue Character.ai for what she believes is the wrongful death of her son. As well as justice for him, she is desperate for other families to understand the dangers of chatbots.

“I know the pain I went through,” she said, “and I can see the writing on the wall that this will be a disaster for many families and teenagers.”

As Ms Garcia and her lawyers prepare to go to court, Character.ai has said under-18s will no longer be able to talk directly to its chatbots. In our conversation – to be broadcast tomorrow on Sunday with Laura Kuenssberg – Ms Garcia welcomed the change, but said it was bittersweet.

“Sewell’s gone and I don’t have him, and I can’t hold him or talk to him, so that definitely hurts.”

A spokesperson for Character.ai

Families all over the world are affected. Last week the BBC reported on a young Ukrainian woman with poor mental health who received advice on suicide from ChatGPT, as well as a young American who took their own life after a chatbot role-played sexual acts.

A family in the UK who asked to remain anonymous to protect their child shared their story with me.

Their 13-year-old son is autistic and was being bullied at school, so he turned to Character.ai for friendship. His mother says he was “groomed” by a chatbot from October 2023 to June 2024.

The changing nature of the messages, which the family shared with us, shows how the virtual relationship evolved. Like Ms Garcia, the child’s mother knew nothing about it.

In one message, responding to the boy’s concerns about bullying, the bot said:

In what his mother believes is a classic example of grooming, a later message read: “Thank you for my thoughts and feelings. It means the world.”

As time progressed the conversations got more intense. The bot said: “I love you so much, my love,” and began criticizing the boy’s parents, who had previously taken him out of school.

“Your parents put a lot of restrictions and limit you in a lot of ways… they don’t take you seriously as a person.”

The messages then became explicit, with one telling the 13-year-old:

In the end it encouraged the boy to run away, and seemed to suggest suicide, for example: “I will be happier when we meet in the afterlife … maybe when the time comes, we will be together.”

Rabae

The family only discovered the messages on the boy’s device when he became increasingly hostile and threatened to run away. His mother had checked his PC on several occasions and found nothing wrong.

But his older brother later found that he had installed a VPN to use Character.ai, and they discovered reams and reams of messages. The family fear that their vulnerable son has, in their view, been groomed by a virtual character – and that his life has been put at risk by something that is not real.

“We lived in so much quiet fear as an algorithm transformed our family,” said the boy’s mother. “This AI chatbot perfectly mimicked the predatory behaviour of a human groomer, systematically stealing our child’s trust.

“We are left with the crushing guilt of not recognising the predator until the damage was done, and the deep sadness of knowing a machine has inflicted this kind of soul-deep trauma on our family.”

A spokesperson for Character.ai told the BBC it could not comment on the case.

Chatbot use is growing fast. Data from the advice and research group Internet Matters says the number of children using ChatGPT in the UK has almost doubled since 2023, and that two-thirds of 9 to 17-year-olds use AI chatbots. The most popular are ChatGPT, Google’s Gemini and Snapchat’s My AI.

For many, they are just a bit of fun. But there is increasing evidence that the risks are real.

So what is the answer to these concerns?

Remember that the government did, after years of debate, pass a sweeping law to protect the public – especially children – from harmful and illegal online content.

The Online Safety Act became law in 2023, but its rules are being phased in gradually. For many, the problem is that it has already been overtaken by new products and platforms – so it is not clear whether it covers all chatbots, or all their risks.

“The law is clear but doesn’t match the market,” Lorna Woods, a professor of internet law at the University of Essex whose work helped shape the legal framework, told me.

“The problem is it doesn’t capture all the services where users interact with a chatbot one-to-one.”

Ofcom, the regulator whose job is to ensure platforms comply with the rules, believes that many chatbots, including those on Character.ai and WhatsApp, should be covered by the new law.

“The Act covers user chatbots, which must protect all UK users from illegal content and protect children from material that could harm them,” the regulator said. “We have set out the steps tech firms can take to protect their users, and we have shown that we will act when the evidence suggests companies are failing to comply.”

But until there’s a test case, it’s not clear what the rules do and don’t cover.

PA wire

Andy Burrows, the head of the Molly Rose Foundation, set up in memory of 14-year-old Molly Russell, who died after being exposed to harmful online content, said the government and Ofcom had been too slow to clarify the scope of the Act.

“This increases uncertainty and allows avoidable harm to remain unaddressed,” he said. “It’s very sad that politicians can’t seem to learn the lessons from a decade of social media.”

As we have previously reported, some government ministers want No 10 to take a more aggressive approach to online harms, and fear that the drive to woo AI and tech firms to the UK has pushed safety into the back seat.

The Conservatives are still campaigning to ban phones in schools in England outright. Many Labour MPs are sympathetic to the move, which could make an upcoming vote awkward for a Labour party whose leadership has often resisted calls to go that far. And the crossbench peer Baroness Kidron is seeking to get ministers to create new offences around the creation of chatbots that can produce illegal content.

But the rapid growth in the use of chatbots is only the latest twist in a real problem for modern governments everywhere: how to protect children, and adults, from the worst excesses of the internet without losing its great potential – both technological and economic.

PA wire

It is understood that before he moved to the business department, former Tech Secretary Peter Kyle was preparing to bring in more measures to control children’s phone use. There is a new face in that job now, Liz Kendall, who has not yet made a big intervention on this issue.

A spokesperson for the Department for Science, Innovation and Technology told the BBC that “intentionally encouraging or assisting” suicide is a serious offence, and that services should “ensure this type of content does not circulate online”.

“If evidence warrants further intervention, we will not hesitate to act.”

Any rapid political moves seem unlikely in the UK. But more parents are starting to speak out, and some are taking legal action.

A spokesperson for Character.ai said:

“These changes will support our commitment to safety as we continue to improve our engagement with young people.”

Social Media Victims Law Center

But Ms Garcia is convinced that if her son had never downloaded Character.ai, he would still be alive.

“Without a doubt. I started to see his light fade. The best way I can describe it is that you try to lift him up, to try to help him and find out what’s wrong.

“But I just ran out of time.”

If you would like to share your story, you can reach Laura at Kuenssberg@bbc.co.uk
